VGG

能不能更深更大获得更好的精度

更多的全连接层

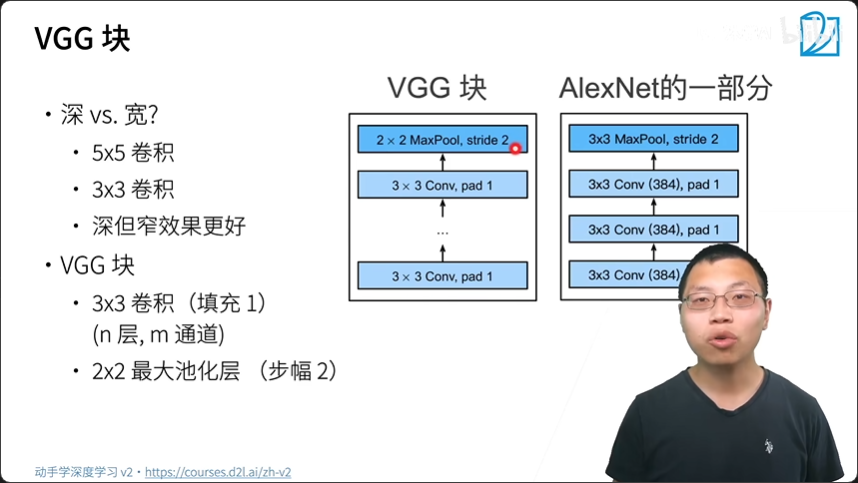

更多的卷积层

将卷积层组合成块

深但窄效果更好

多个VGG块后接全连接层

不同次数的重复块得到不同的架构

VGG-16,VGG-19

更大更深的AlexNet

非常的占内存

import torch

from torch import nn

from d2l import torch as d2l

# VGG块的实现

def vgg_block(num_convs, in_channels, out_channels):

layers = []

for _ in range(num_convs):

layers.append(nn.Conv2d(in_channels, out_channels,

kernel_size=3, padding=1))

layers.append(nn.ReLU())

in_channels = out_channels

layers.append(nn.MaxPool2d(kernel_size=2,stride=2))

return nn.Sequential(*layers)conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

conv_blks = []

in_channels = 1

# 卷积层部分

for (num_convs, out_channels) in conv_arch:

conv_blks.append(vgg_block(num_convs, in_channels, out_channels))

in_channels = out_channels

return nn.Sequential(

*conv_blks, nn.Flatten(),

# 全连接层部分

nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(), nn.Dropout(0.5),

nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(0.5),

nn.Linear(4096, 10))

net = vgg(conv_arch)